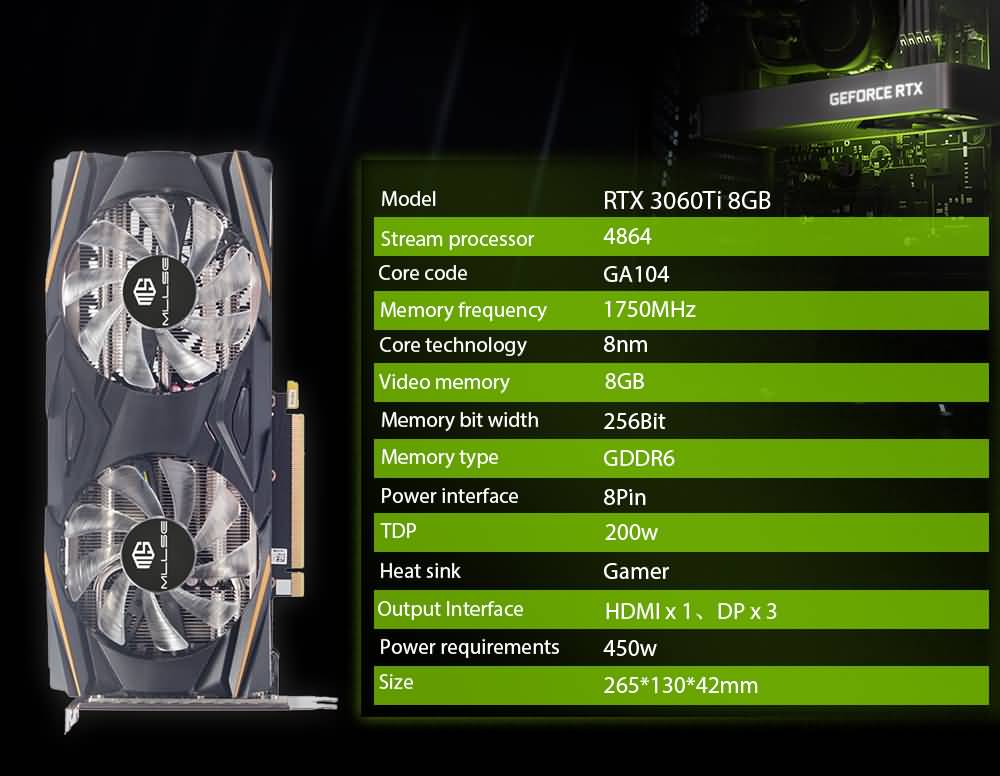

MLLSE New Graphics Card RTX 3060Ti 8GB X-GAME Hynix GDDR6 256bit NVIDIA GPU DP*3 PCI Express 4.0 x16 rtx3060ti 8gb Video card

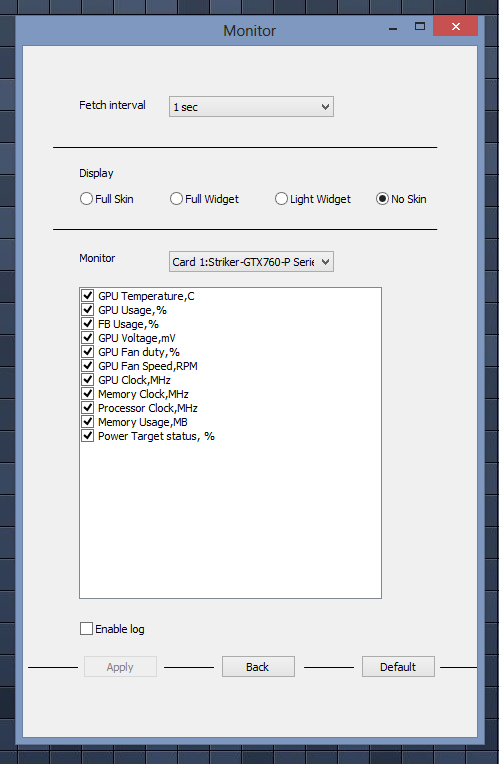

What kind of GPU is the key to speeding up Gigapixel AI? - Product Technical Support - Topaz Discussion Forum

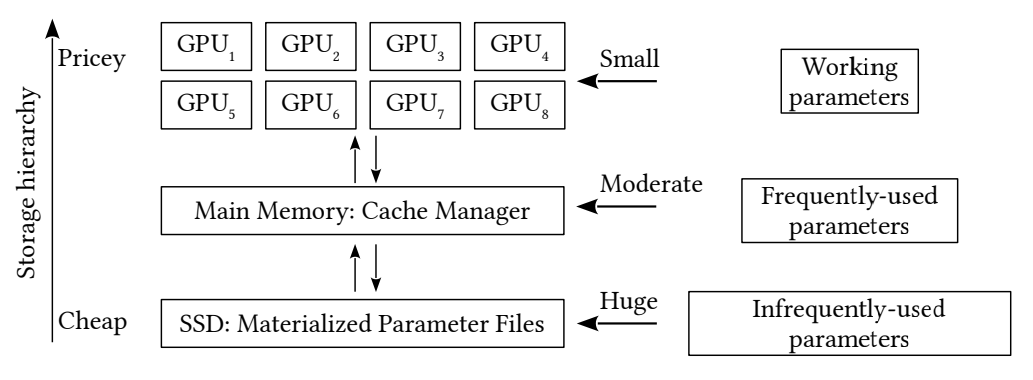

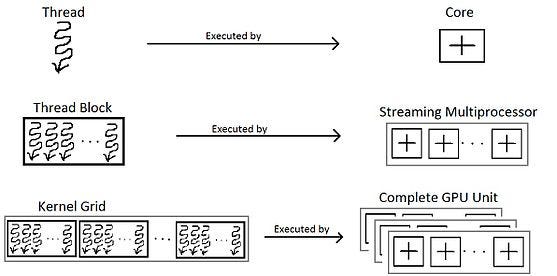

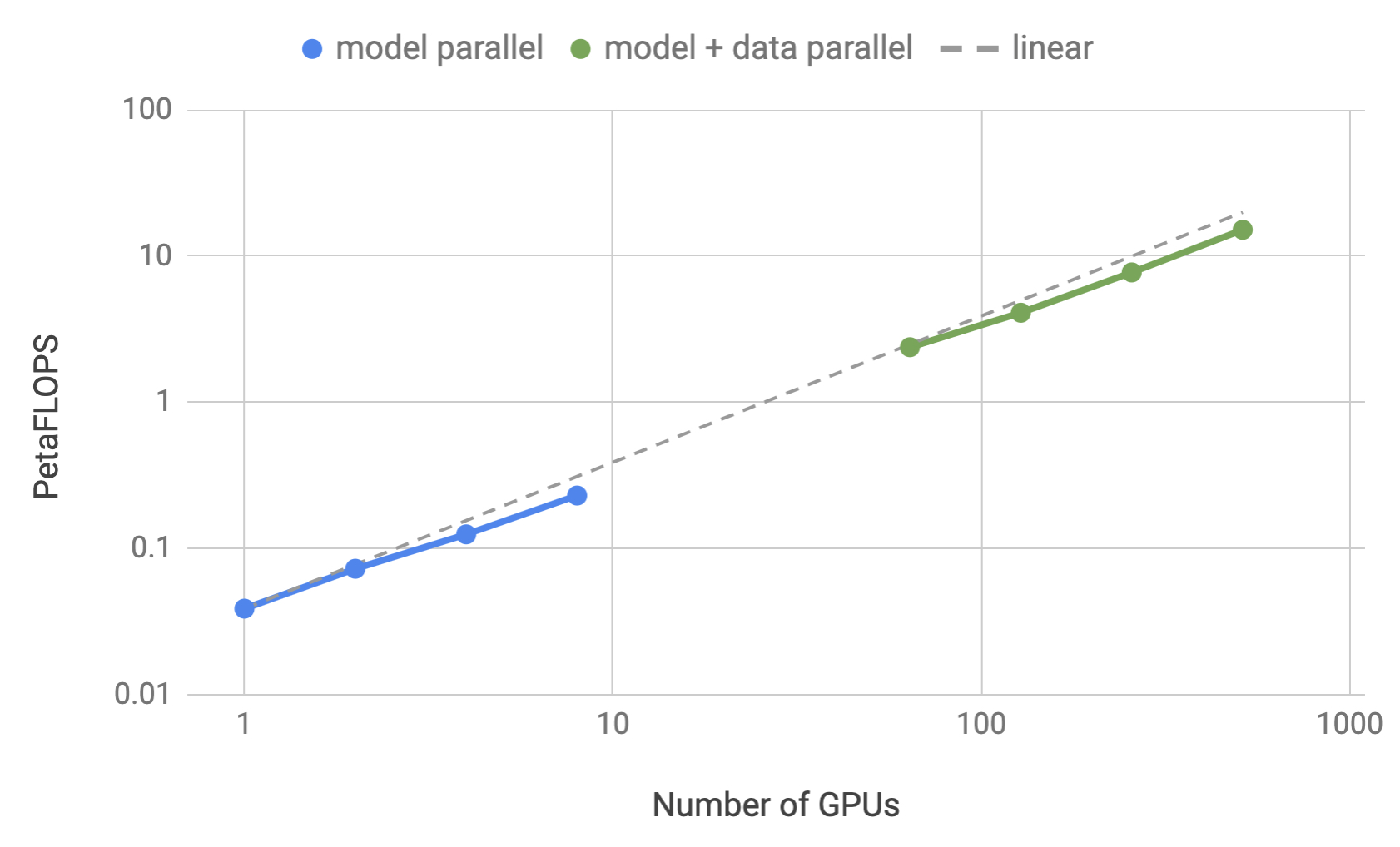

ZeRO-Infinity and DeepSpeed: Unlocking unprecedented model scale for deep learning training - Microsoft Research

Parameters of graphic devices. CPU and GPU solution time (ms) vs. the...

NVIDIA, Stanford & Microsoft Propose Efficient Trillion-Parameter Language Model Training on GPU Clusters | Synced

ZeRO-Offload: Training Multi-Billion Parameter Models on a Single GPU